Are you looking for an end-to-end data analysis project with source code to inspire and guide you in your own project? Look no further; you have come to the right place. This project is a step-by-step tutorial to help you accomplish your data science and machine learning (ML) project. I hope its originality will inspire you to innovate in your own projects and solutions.

1.0. Introduction

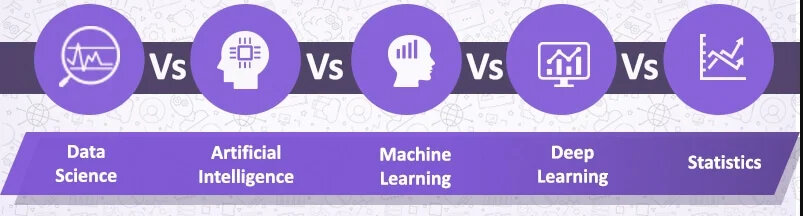

In the ever-evolving landscape of technology, the allure of Artificial Intelligence (AI), Machine Learning (ML), and Data Science has captivated professionals seeking career transitions or skill advancement.

However, stepping into these domains has its challenges. Aspiring individuals like me often confront uncertainties surrounding market demands, skill prerequisites, and the intricacies of navigating a competitive job market. Thus, in 2023, I found myself in a data science and machine learning course as I tried to find my way into the industry.

1.1 Scenario Summary

Generally, at the end of any learning program or course, a learner or participant has to demonstrate that they meet all the criteria for successful completion by way of a test. The final test is for the purpose of certifications, graduation, and subsequent release to the job market. As a result, a test, exam, or project deliverable is administered to the student. Then, certification allows one to practice the skills acquired in real-life scenarios or situations such as company employment or startups.

Applying the above concept to myself, I was certified at the end of my three-month intermediate-level course in 2023. The course is titled “Data Science and Machine Learning with the Python Programming Language.” The Africa Data School (ADS) offers the course in Kenya and other countries in Africa.

At the end of the ADS course, the learner has to work on a project that meets the certification and graduation criteria. According to the college guidelines, to graduate I had to work on either:

- An end-to-end data analysis project, OR

- An end-to-end machine learning project.

The final project product was to be presented as a Web App deployed using the Streamlit library in Python. To achieve optimum project results, I performed it in two phases: the data analysis phase and the App design and deployment phase.

1.2. End-to-End data analysis project background: What inspired my project

Last year, 2023, I found myself at a crossroads in my career path. I didn’t have a stable income or job. For over six and a half years, I have been a hybrid freelancer focusing on communication and information technology gigs. As a man, when you cannot meet your financial needs, your mental and psychological well-being is affected. Thus, things were not going well for me socially, economically, or financially. For a moment, I considered looking for a job to diversify my income and provide for my nuclear family.

Since I mainly work online and look for new local gigs after my contracts end, I started looking for ways to diversify my knowledge, transition into a new career, and subsequently increase my income. From my online writing gigs and experience, I observed a specific trend over time and identified a gap in the data analysis field. Let us briefly look at how I spotted the market gap in data analysis.

1.2.1. The identified gap

I realized that data analysis gigs and tasks that required programming knowledge were highly paid. However, only a few people bid on them on the different online job platforms. The big question was why data analysis jobs, especially those requiring a programming language, stayed on the platforms for so long with few bids. The programming languages required by most of those jobs included Python, R, SQL, Scala, MATLAB, and JavaScript. The list is not exhaustive; more languages can be found online.

This observation prompted me to do some research. I concluded that many freelancers, myself included, lacked the programming skills needed for data analysis and machine learning. To venture into the new field and take advantage of the gap, I had to learn and gain new skills.

However, I needed guidance to take advantage of the market gap and transition into the new data analysis field with one of the programming languages. I did not readily find one, so I decided to take a course to gain all the basic and necessary skills and learn the rest later.

Following strong intuition coupled with online research about data science, I landed at ADS for a course in Data Science and Machine Learning (ML) with the Python Programming Language. It is an instructor-led intermediate course with all the necessary learning resources and support provided.

Finally, at the end of my course, I decided to come up with a project that would help people like me to make the right decisions. It is a hybrid project. Therefore, it uses end-to-end data analysis skills and machine learning techniques to keep it current with financial market rates.

I worked on it in two straightforward phases:

1.2.2. Phase 1: End-to-End Data Analysis

– Dataset Acquisition, Analysis, and Visualization using the Jupyter Notebook and Anaconda.

1.2.3. Phase 2: App Design and Deployment

– Converting the Phase 1 information into a Web App using the Streamlit library.

Next, let me take you through the first phase. In any project, it is important to start by understanding its overall objective. By comprehending the goal of the project, you can determine if it fits your needs. It is not helpful to spend time reading through a project only to realize that it is not what you wanted.

Therefore, I’ll start with the phase objective before moving on to the other sections of the project’s phase one.

1.3. Objective of the end-to-end data analysis project phase 1

The project analyzes and visualizes a dataset encompassing global salaries in the AI, ML, and Data Science domains to provide you with helpful data-driven insights to make the right career decisions. It delves into critical variables, including the working years, experience levels, employment types, company sizes, employee residence, company locations, remote work ratios, and salary in USD and KES of the dataset. Thus, the final results were useful data visualizations for developing a web application.

2.0. The End-to-End Data Analysis Process.

The first phase was the data analysis stage, where I searched and obtained a suitable dataset online.

Step 1: Exploratory Data Analysis (EDA) Process

2.1 Dataset choice, collection, description, and loading.

The project’s data is obtained from the ai-jobs.net platform. For this project, the link used to load the data explored is for the CSV file on the platform’s landing page. Nonetheless, the dataset can also be accessed through Kaggle. Since the data is updated weekly, the link will facilitate continuous weekly data fetching for analysis in order to keep the Ultimate Data Jobs and Salaries Guider Application updated with the current global payment trends.

Dataset Source = https://ai-jobs.net/salaries/download/salaries.csv

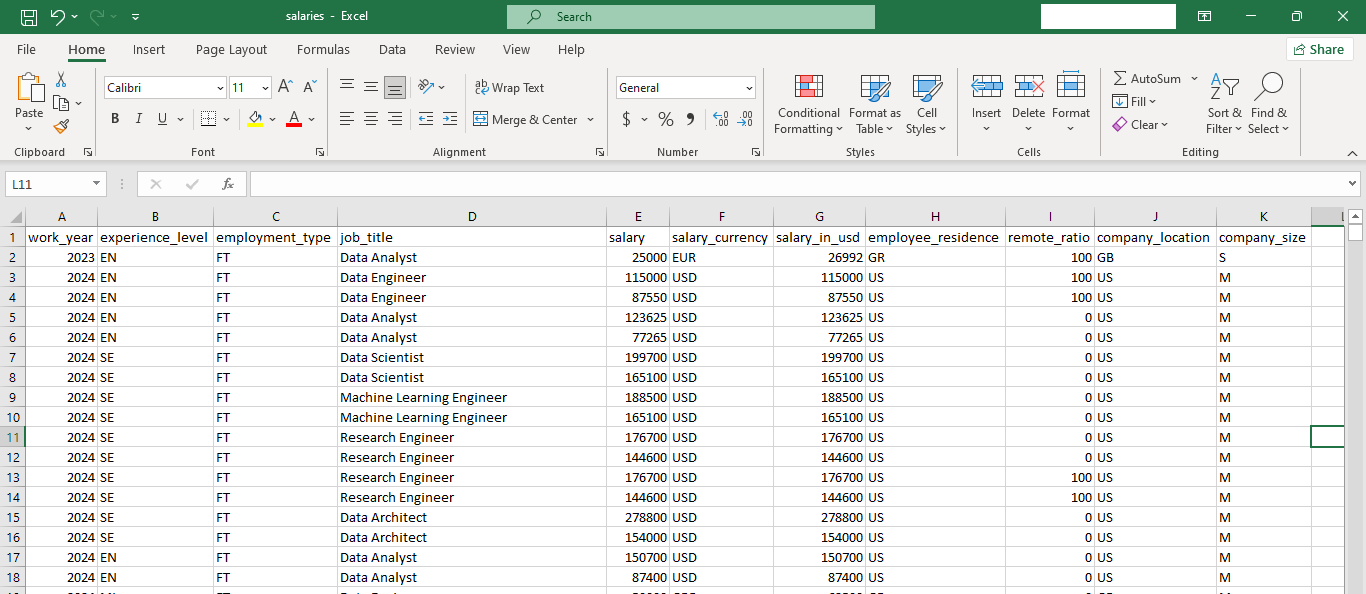

2.1.1 Raw dataset description

The dataset contained 11 columns with the following characteristics:

- work_year: The year the salary was paid.

- experience_level: The experience level in the job during the year.

- employment_type: The type of employment for the role.

- job_title: The role worked during the year.

- salary: The total gross salary amount paid.

- salary_currency: The currency of the salary paid is an ISO 4217 currency code.

- salary_in_usd: The salary in USD (FX rate divided by the average USD rate for the respective year via data from fxdata.foorilla.com).

- employee_residence: Employee’s primary country of residence as an ISO 3166 country code during the work year.

- remote_ratio: The overall amount of work done remotely.

- company_location: The country of the employer’s main office or contracting branch as an ISO 3166 country code.

- company_size: The average number of people that worked for the company during the year.

2.1.2 Data loading for the end-to-end data analysis process

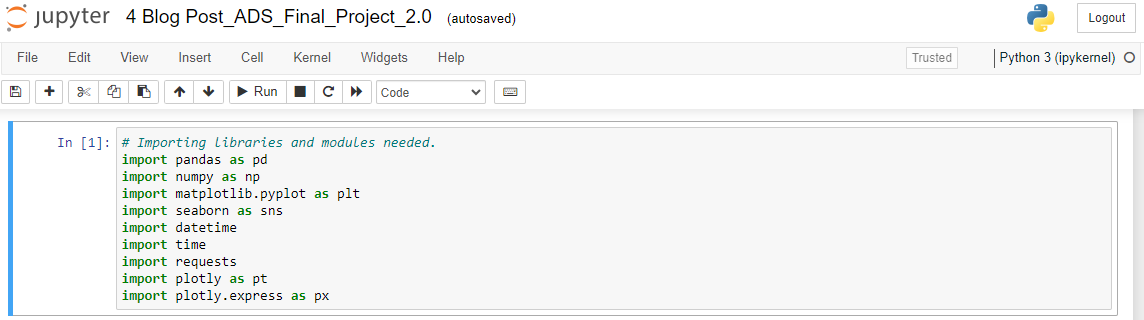

First, I imported all the libraries and modules needed to load, manipulate, and visualize the data in cell 1 of the Jupyter Notebook.

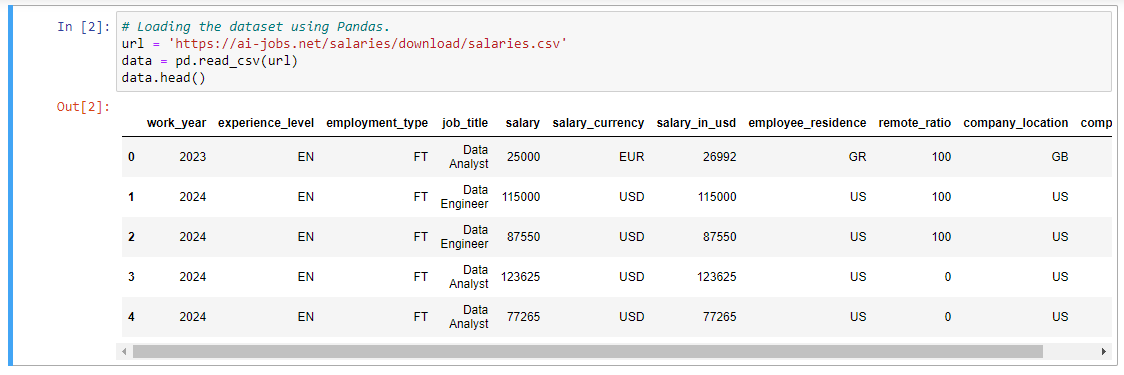

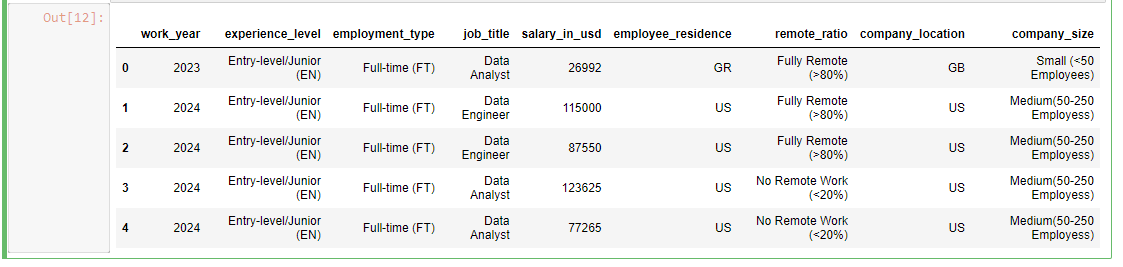

Then, I loaded the data using Pandas in cell 2 and received the output below. The Pandas “.head()” method displayed the first five rows of the dataset.

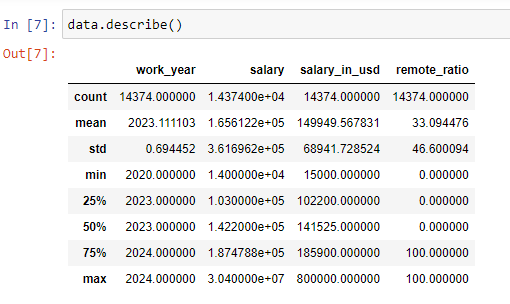

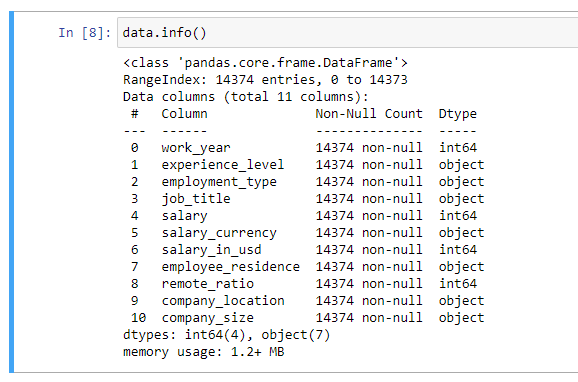

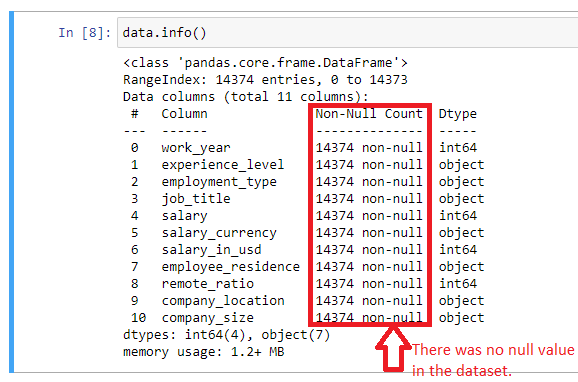

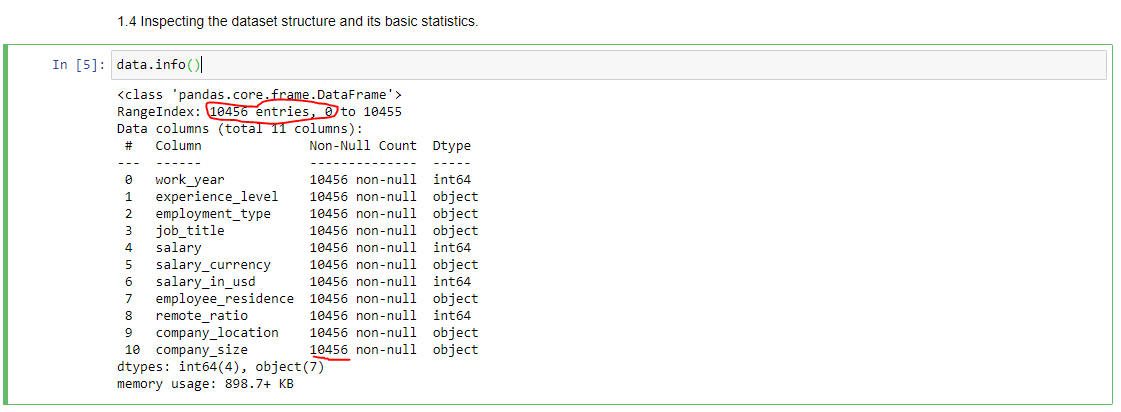
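For readers who cannot see the screenshots, the loading step can be sketched roughly as follows. This is a minimal sketch, not the exact notebook cell: the function name `load_salaries` is my own, and only the URL comes from the dataset source quoted above.

```python
import pandas as pd

# URL quoted in this post; the source refreshes the CSV over time.
DATA_URL = "https://ai-jobs.net/salaries/download/salaries.csv"


def load_salaries(source=DATA_URL):
    """Read the salaries CSV from a URL, a file path, or a file-like object."""
    return pd.read_csv(source)


# Typical notebook usage (needs an internet connection):
# df = load_salaries()
# df.head()  # first five rows
```

Accepting any source that `pd.read_csv` understands makes it easy to test the rest of the pipeline against a small local file before fetching the live data.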

After loading the salaries dataset from the URL, I used the Pandas library to study it. I analyzed the dataset’s frame structure, basic statistics, numerical data, and any null values present to understand its composition before proceeding with the analysis process. The results showed that there were:

i. Four columns out of the total eleven with numerical data.

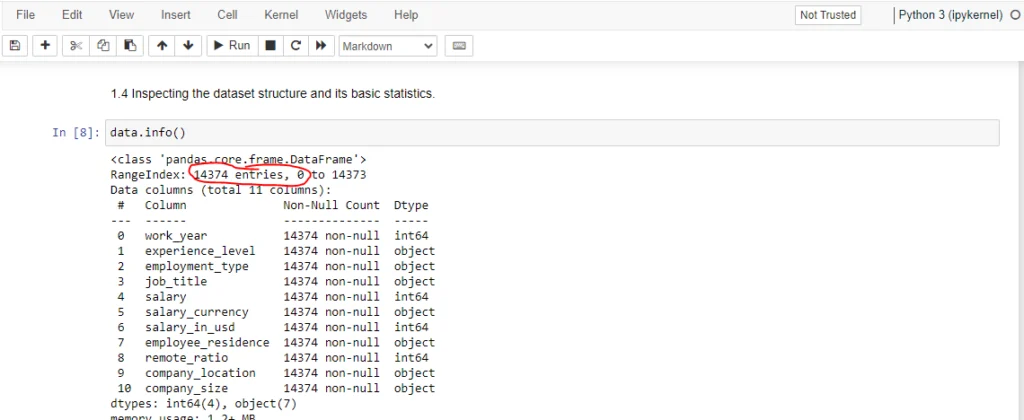

ii. Eleven columns in total, containing 14,373 entries: four with numerical data and seven with object data types.

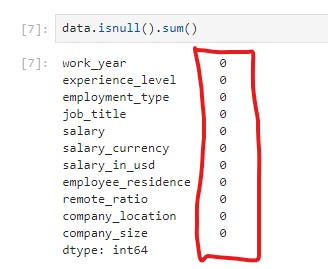

iii. There was no missing data in the fields of the 11 columns of the dataset. You can confirm this in the screenshot below.
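The three checks above can be reproduced with a few Pandas calls. The helper below is a sketch under my own naming (`summarize` is hypothetical, not the notebook's code); it gathers the row count, the numeric and non-numeric columns, and the number of missing values per column.

```python
import pandas as pd


def summarize(df):
    """Collect the basic facts checked during the EDA step."""
    numeric_cols = df.select_dtypes(include="number").columns.tolist()
    other_cols = df.select_dtypes(exclude="number").columns.tolist()
    return {
        "rows": len(df),
        "columns": df.shape[1],
        "numeric_columns": numeric_cols,
        "non_numeric_columns": other_cols,
        # Count of missing values in each column, as plain integers.
        "missing_per_column": {col: int(n) for col, n in df.isnull().sum().items()},
    }
```

In a notebook, `df.info()` and `df.describe()` print much of the same information directly; the dictionary form is simply easier to assert against.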

2.2. Conclusions – EDA Process.

Based on the above results, the dataset does not contain any missing values. The categorical and numerical datatypes are well organized, as shown in the above outputs. The dataset has eleven columns—4 with integer datatypes and 7 with object datatypes. Therefore, the data is clean, ready, and organized for use in the analysis phase of my project.

Step 2: Data Preprocessing

The main preprocessing activities performed were dropping the unnecessary columns, handling the categorical data columns, and feature engineering.

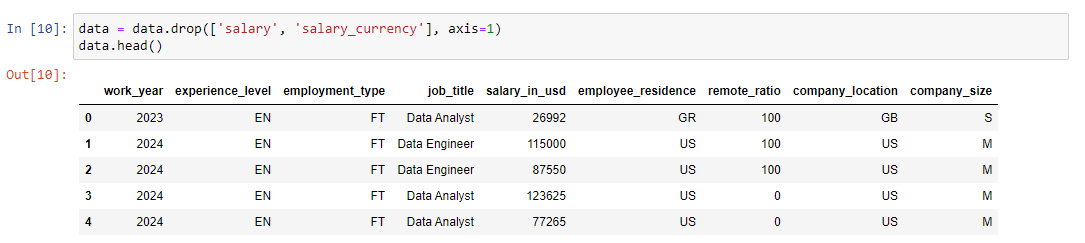

2.2.1. Dropping the unnecessary columns

The columns dropped were salary and salary_currency. I dropped them for one main reason: the salary column held amounts in different currencies depending on employee residence and company location, and those amounts were already converted into USD in the salary_in_usd column. Thus, the dropped columns were unnecessary because I only needed the salary amount in one currency.
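Dropping the two columns takes a single Pandas call. The sketch below wraps it in a hypothetical helper (`drop_local_salary_columns` is my own name) that returns a new DataFrame and leaves the original untouched.

```python
import pandas as pd


def drop_local_salary_columns(df):
    """Return a copy of df without the local-currency salary columns."""
    return df.drop(columns=["salary", "salary_currency"])
```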

2.2.2. Handling the categorical data columns

I developed a code snippet summarizing and displaying all the categorical columns in the salaries dataset. The first five entries were printed out and indexed from zero, as shown in the sample below.
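One way to produce such a summary is to select the non-numeric columns and show their first rows. This is only a sketch; `categorical_preview` is a hypothetical name, and the original snippet may have been written differently.

```python
import pandas as pd


def categorical_preview(df, n=5):
    """Return the first n rows of every non-numeric (categorical) column."""
    return df.select_dtypes(exclude="number").head(n)
```

Calling `df.select_dtypes(exclude="number").nunique()` alongside it shows how many distinct categories each column holds.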

2.2.3. The engineered features in the end-to-end data analysis process

Making the data in the dataset more meaningful and valuable to the project is crucial. Therefore, I engineered two new features: full name labeling and salary conversion. Next, let us describe how each feature came about.

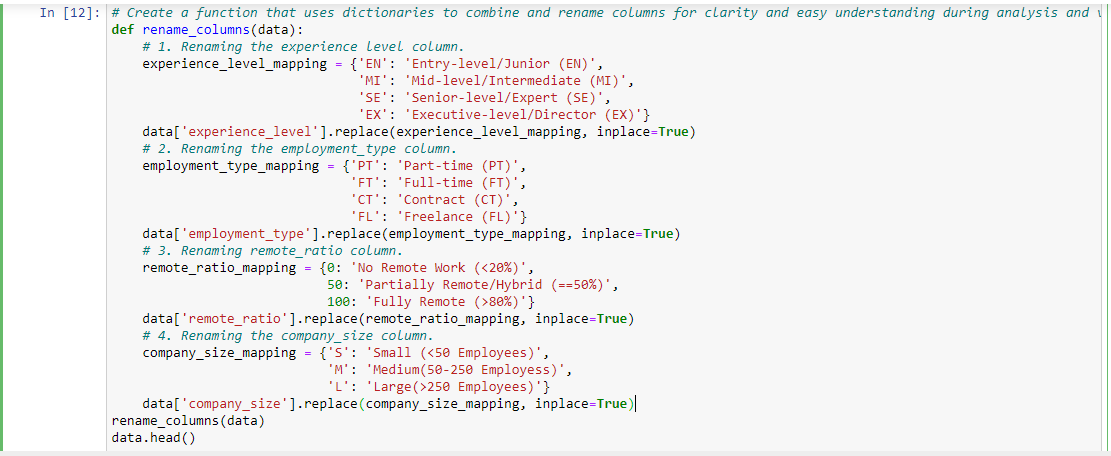

2.2.3.1. Full Name Labeling:

Initially, the category labels in the dataset were written in short form using initials. For example, FT was the short form for full-time employment, and so on. Thus, I took all the labels written as initials, spelled them out in full, and added the initials at the end. For example, I changed “FT” to “Full Time (FT).” This ensured proper labeling and easier comprehension, especially in the data visualizations.

The Python code snippet below was used for full naming.

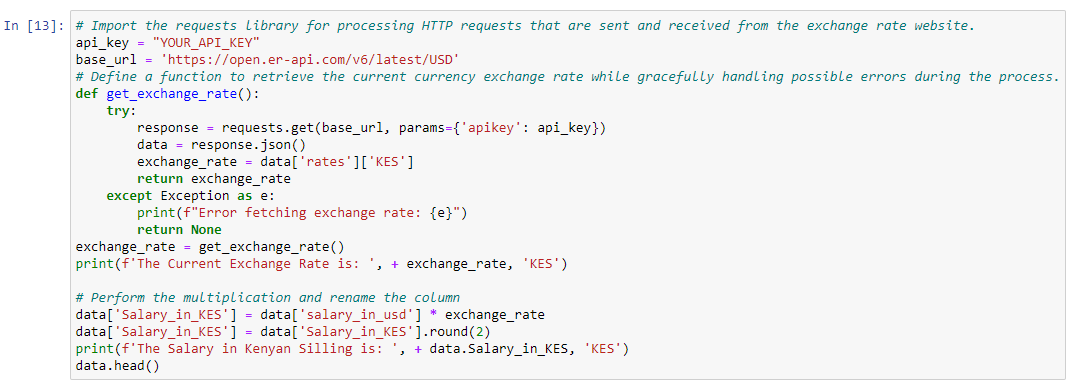
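In case the screenshot is hard to read, the renaming idea can be sketched with a dictionary and `Series.map`. Only the “Full Time (FT)” label is quoted in this post; the other three labels and the helper name are my assumptions, following the same pattern for the PT, CT, and FL codes.

```python
import pandas as pd

# "Full Time (FT)" follows the example in the text; the other labels are
# assumed to follow the same "<full name> (<initials>)" pattern.
EMPLOYMENT_TYPE_LABELS = {
    "FT": "Full Time (FT)",
    "PT": "Part Time (PT)",
    "CT": "Contract (CT)",
    "FL": "Freelance (FL)",
}


def label_employment_type(df):
    """Return a copy of df with employment_type codes spelled out in full."""
    out = df.copy()
    codes = out["employment_type"]
    # Codes missing from the mapping are kept as-is instead of becoming NaN.
    out["employment_type"] = codes.map(EMPLOYMENT_TYPE_LABELS).fillna(codes)
    return out
```

The same approach works for the other abbreviated columns once a mapping is written for each.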

2.2.3.2. Salary Conversion:

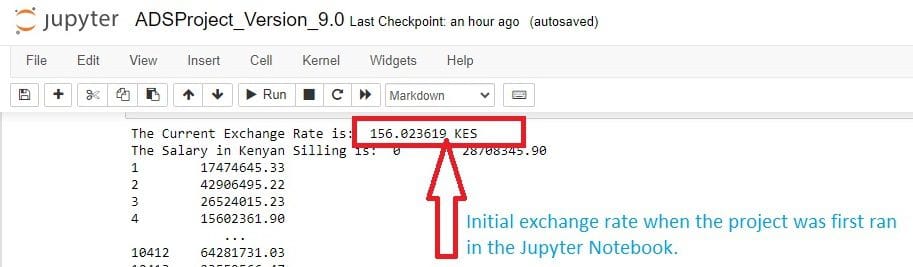

The initial salary column was in US dollars. As with the previous feature, I came up with a method of converting the “salary_in_usd” column into Kenyan Shillings and named the new column “Salary_in_KES.” Since the dataset is updated weekly, the conversion process was automated. I created a function that requests the current exchange rate of the US dollar against the Kenyan Shilling and multiplies it by the salary values in dollars to get the salary values in Kenyan Shillings.

The function uses an API key and a base URL to request the current exchange rate from a website and prints it in the output.

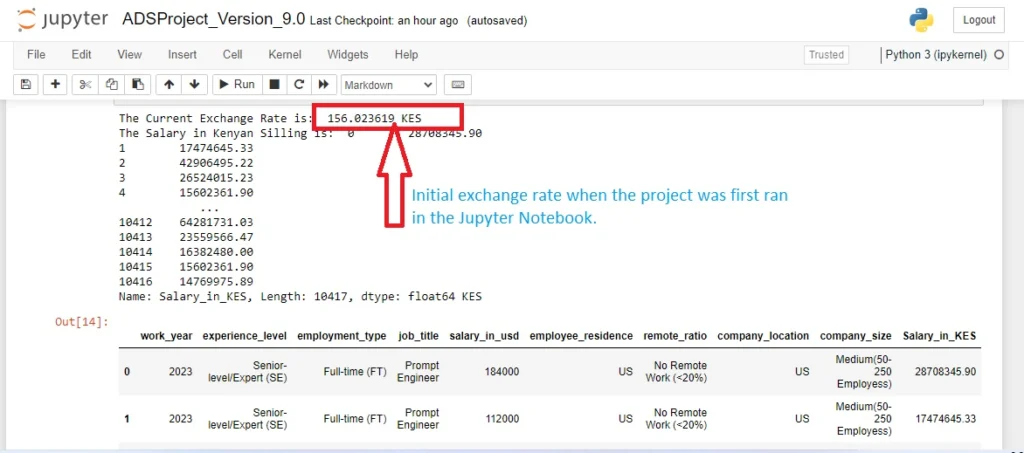

Then, the function multiplies the exchange rate obtained by the salary in US dollars to create a new column named “Salary_in_KES.” The screenshot below shows the new column, which is circled in red.

Therefore, every time the data-jobs guide application is launched, the process will be repeated, and the output will be updated accordingly.
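The conversion logic can be sketched as below. Please treat it as an illustration only: this post does not name the exchange-rate service, so the query parameters and the JSON shape in `fetch_usd_to_kes_rate` are hypothetical and must be adapted to whichever provider you use. Only the column names `salary_in_usd` and `Salary_in_KES` come from the project.

```python
import json
import urllib.parse
import urllib.request

import pandas as pd


def fetch_usd_to_kes_rate(base_url, api_key):
    """HYPOTHETICAL fetcher: parameter names and response shape are assumed."""
    query = urllib.parse.urlencode({"apikey": api_key, "base": "USD", "symbols": "KES"})
    with urllib.request.urlopen(f"{base_url}?{query}", timeout=10) as response:
        payload = json.load(response)
    # Assumed response shape: {"rates": {"KES": <float>}}
    return float(payload["rates"]["KES"])


def add_salary_in_kes(df, usd_to_kes_rate):
    """Return a copy of df with a Salary_in_KES column added."""
    out = df.copy()
    out["Salary_in_KES"] = out["salary_in_usd"] * usd_to_kes_rate
    return out
```

Keeping the network call separate from the multiplication means the conversion can be checked with a fixed rate, for example `add_salary_in_kes(df, 150.0)`, without contacting any service.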

Next, let us briefly prove that the automation really occurs for both the dataset and the exchange rate above.

2.2.3.3. Proof that the automated processes are effective in the project

This was proven during the end-to-end data analysis process and web application development: the current value was printed out every time the data analysis Jupyter Notebook was opened and the cell was run.

Exchange rate automation confirmation

As mentioned earlier, the first output result was captured in November 2023. The exchange rate was 1 USD = 156.023619 KES.

As I write this post in March 2024, the feature gives back an exchange rate of 137.030367 KES. See the screenshot below.

Let us find the difference by taking the initial amount minus the current exchange rate. That is 156.023619 – 137.030367 = KES 18.993252. At this moment, the dollar has depreciated against the Shilling by approximately 19 KES.
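The subtraction above can be double-checked in Python. The sketch uses the two rates quoted in this section; `Decimal` is my choice to avoid floating-point rounding noise, and the percentage is an extra figure derived from the same two numbers.

```python
from decimal import Decimal

rate_nov_2023 = Decimal("156.023619")  # KES per 1 USD, November 2023
rate_mar_2024 = Decimal("137.030367")  # KES per 1 USD, March 2024

# Absolute drop in the number of shillings one dollar buys.
change = rate_nov_2023 - rate_mar_2024
# The same drop expressed as a percentage of the November rate.
percent_change = change / rate_nov_2023 * 100

print(change)  # 18.993252
print(round(percent_change, 2))
```

In relative terms, that is a drop of roughly 12 percent in the shilling value of one dollar between the two readings.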

Guide Publication Date

As you may have noticed at the beginning of this guide, I published it in October, yet I noted above that I was writing in March 2024 when calculating the difference. Both are true. I had to pull the whole site down for unavoidable reasons, and I am now recreating the posts. I have kept the original results for consistency, and I will later update the guide with 2024 data.

It is important to note that the process is constant.

Proof that the dataset is updated weekly

The dataset is frequently updated as the main data source is updated. To prove this fact, the total entries in the dataset should increase with time. For example, the screenshot below shows 10456 entries in November 2023.

Similarly, the following screenshot shows 14354 entries in March 2024. The number of entries has increased, and the changes are automatically reflected in our project.

Next, let us find the difference: 14374 updated entries – 10456 initial entries = 3918 new entries.

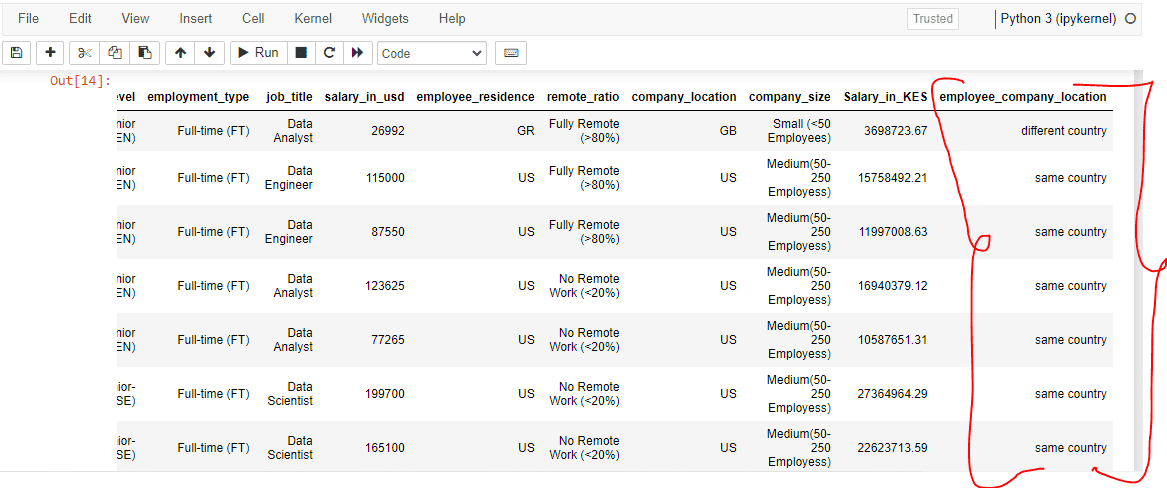

2.2.3.4. Employee Company Location Relationship:

I created a new dataset column named “employee_company_location.”

The code checks whether each employee resides in the country where the company is located and records the result in the new column. The value is true when the employee residence and company location country codes are the same. For example, in the screenshot below, the first person resided in a country different from the company location.
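A compact way to build such a column is an element-wise comparison of the two country-code columns. In this sketch I store the result as a boolean; that choice and the helper name are assumptions, since the project may store a text label instead.

```python
import pandas as pd


def add_employee_company_location(df):
    """Flag rows where the employee lives in the company's country."""
    out = df.copy()
    # True when both ISO 3166 country codes match (boolean output is assumed).
    out["employee_company_location"] = out["employee_residence"] == out["company_location"]
    return out
```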

Step 3: Data Visualization

Here, we are at the last step of phase 1. I hope you have already learned something new and are getting inspired to jump-start your end-to-end data analysis project. Let me make it even more interesting, energizing, and motivating in the next graphical visualization stage. In the next section, I’m going to do some amazing work, letting the data speak for itself.

I know you may ask yourself, how? Don’t worry because I will take you through step by step. We let the data speak by turning it into precise and meaningful statistical visuals. Examples include bar charts, pie charts, and line graphs.

In this project, I developed seven critical dimensions. The accompanying screenshot figures show the code snippet I developed to process the data and visualize each dimension.

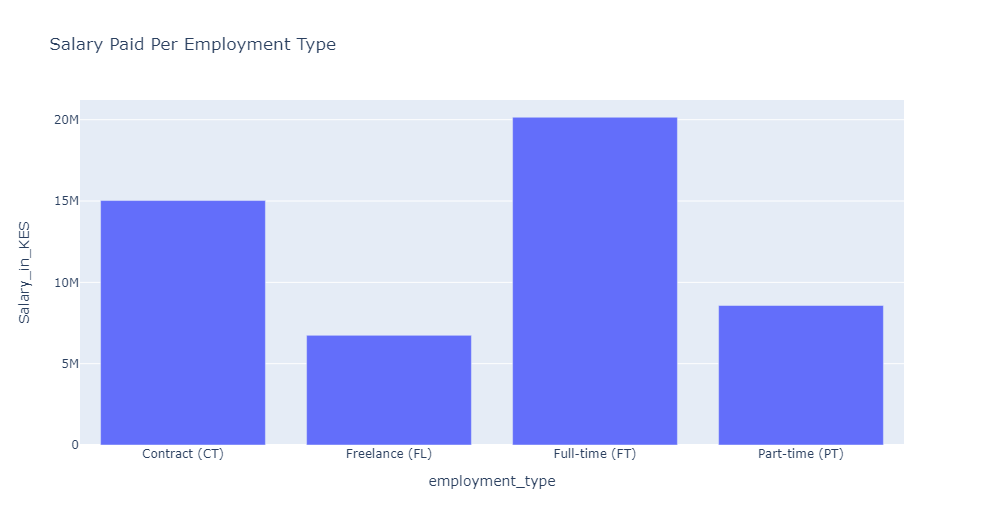
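Most of the dimensions below follow the same pattern: group the salaries by one column, aggregate, and draw a chart. The sketch below shows that pattern with a mean and a bar chart. The function names are my own, and the charts in the screenshots may use different aggregations or plot types.

```python
import pandas as pd


def mean_salary_by(df, column, value="salary_in_usd"):
    """Average salary per category of `column`, highest first."""
    return df.groupby(column)[value].mean().sort_values(ascending=False)


def plot_mean_salary_by(df, column, value="salary_in_usd"):
    """Draw a bar chart of the averages and return the Matplotlib Axes."""
    # Imported here so the aggregation above works without Matplotlib installed.
    import matplotlib.pyplot as plt

    ax = mean_salary_by(df, column, value).plot(kind="bar")
    ax.set_xlabel(column)
    ax.set_ylabel(f"Mean {value}")
    ax.set_title(f"Mean {value} by {column}")
    plt.tight_layout()
    return ax
```

For example, `plot_mean_salary_by(df, "employment_type")` covers the first dimension, and swapping the column name to `"work_year"`, `"remote_ratio"`, `"company_size"`, or `"experience_level"` covers the next four.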

1. Employment Type:

Unraveling the significant salary differences based on employee roles. The roles present in the data were full-time (FT), part-time (PT), contract (CT), and freelance (FL) roles.

Code snippet for the visualization above.

2. Work Years:

Examining how salaries evolve over the years.

Code snippet for the visualization above.

3. Remote Ratio:

Assessing the influence of remote work arrangements on salaries.

Code snippet for the visualization above.

4. Company Size:

Analyzing the correlation between company size and compensation.

Code snippet for the visualization above.

5. Experience Level:

Understanding the impact of skill proficiency on earning potential.

Code snippet for the visualization above.

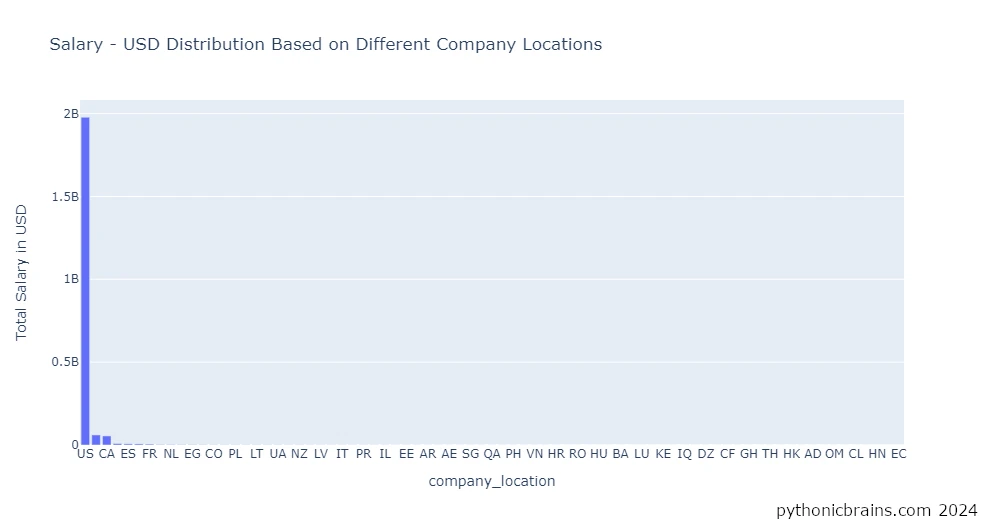

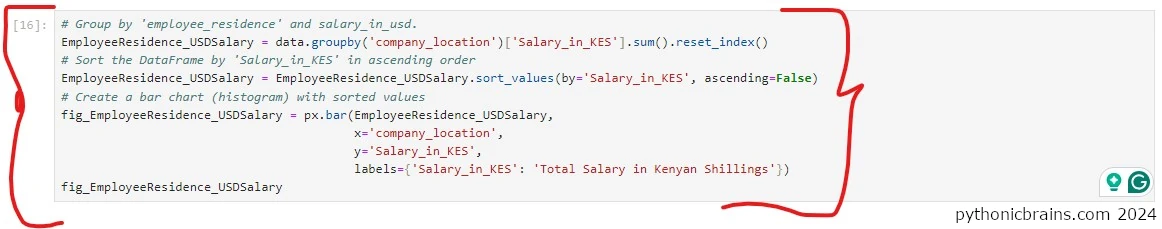

6. Company Location:

Investigating geographical variations in salary structures.

Code snippet for the visualization above.

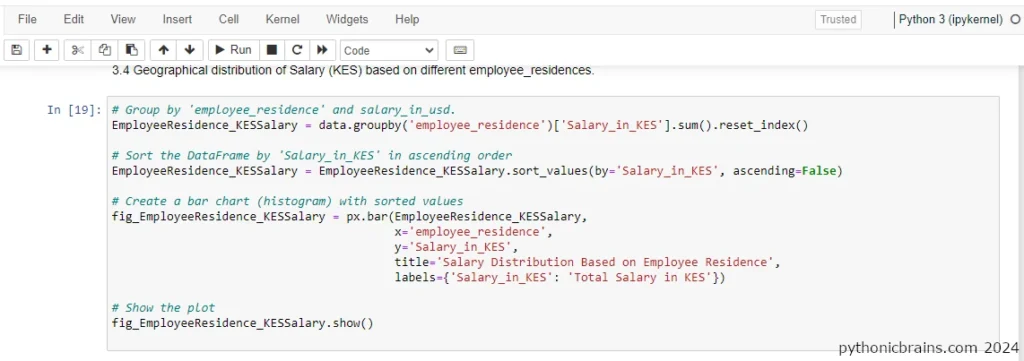

7. Employee Residence:

Exploring the impact of residing in a specific country on earnings.

Code snippet for the visualization above.

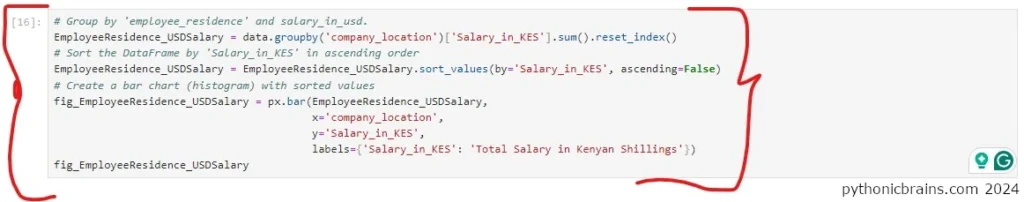

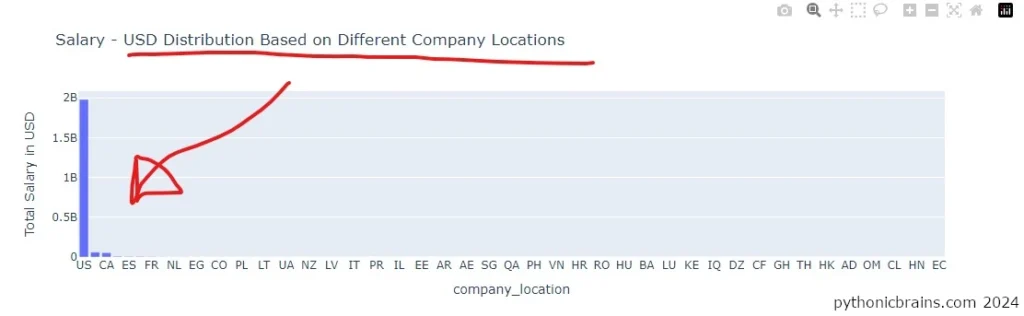

8. Salary (USD) – Distribution Per Company Location:

Investigating how earnings are distributed based on employee residence and company location.

Code snippet for the visualization above.

9. Salary (KES) – Distribution Based on Different Company Locations:

Investigating how earnings in Shillings are distributed based on employee residence and company location.

Code snippet for the visualization above.
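For the two distribution views, a numeric summary per company location is a useful companion to the chart. The helper below is a sketch under my own naming; it reports the count, median, minimum, and maximum salary for the locations with the most records.

```python
import pandas as pd


def salary_distribution_by_location(df, value="salary_in_usd", top_n=10):
    """Summarize salaries for the top_n company locations by record count."""
    summary = df.groupby("company_location")[value].agg(["count", "median", "min", "max"])
    return summary.sort_values("count", ascending=False).head(top_n)
```

Passing `value="Salary_in_KES"` gives the same summary in Shillings, and `df.boxplot(column="salary_in_usd", by="company_location")` is one way to draw the distributions themselves.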

In Summary:

We’ve come to the end of phase 1, the end-to-end data analysis phase of the project. You have seen how to find, acquire, clean, preprocess, and explore a dataset. Therefore, considering this project phase alone, you can start and complete an end-to-end data analysis project for your own purposes, whether for your job or class work.

Additionally, the source code snippets make it easier to visualize your own data. Your dataset may be different, but the snippets can directly help you produce similar or better visualizations. In my case and for this project design, the phase opens the door to the second project milestone, phase 2.