Are you looking for a guide on how to build a web application for your data analysis project? Or are you looking for a data analysis and machine learning (ML) project to inspire your creativity? If your answer to either question is “Yes,” this step-by-step guide is for you. This is a place where we guide, inspire, and motivate people to venture into data science and ML. With that said, let’s move on to the project.

The application landing page:

When you open the application in a browser, you will see the app name and tagline. Both are listed below and shown in the screenshot that follows them.

Name:

The Ultimate Career Decision-Making Guide: – Data Jobs

Tagline:

Navigating the Data-Driven Career Landscape: A Deep Dive into Artificial Intelligence (AI), Machine Learning (ML), and Data Science Salaries.

Project introduction

This is the second part of the project. As you may remember from the introductory part, I divided the project into two phases. In phase 1, I carried out the end-to-end data analysis in a Jupyter Notebook. That work forms the basis for this phase: web application development.

To recap, I performed exploratory data analysis (EDA) in step 1, preprocessed the data in step 2, and visualized it in step 3. From those visualizations, I defined nine key analytical dimensions, and I built this web application to share the resulting insights with you and the world.

I recommend you visit the application using the link at the end of this guide.

What to expect in this Web Application building guide.

This is a step-by-step guide on how to design and develop a web application for your data analysis and machine learning project. By the end of the guide, you’ll have seen how I used the Python Streamlit library and a GitHub repository to create the application. Along the way, you should gain the skills and confidence to build your own web apps using free and readily available tools.

The Challenge

Today, several challenges keep people from venturing into the fields of Artificial Intelligence (AI), Machine Learning (ML), and Data Science. One primary challenge is understanding the nuanced factors that influence career progression and salary structures within these fields.

Currently, the demand for skilled professionals in AI, ML, and Data Science is high, but so is the competition. For example, 2024 statistics show that job postings in data engineering have increased by 98%, while postings for AI roles have surged 119% over two years. Additionally, machine learning jobs are expected to grow by 40%, or 1 million new positions, in the next five years.

These market trends point to rising demand for AI, ML, and Data Science skills. That growth calls for more experts and professionals, so now is a good time to join the field and take advantage of the trend. But how do you do that? The subsequent sections of this guide answer that question, directly or indirectly.

Navigating this landscape requires a comprehensive understanding of how various elements such as years of experience, employment type, company size, and geographic location impact earning potential. That’s why I developed this solution for people like you.

The Solution: Web Application

To address these challenges, the project sets out to demystify the intricate web of factors contributing to success in AI, ML, and Data Science careers. By leveraging data analytics and visualization techniques, we aim to provide actionable insights that empower individuals to make informed decisions about their career trajectories. Next, let’s look at the project objective.

The project’s phase 2 objective.

The goal is to create a simple web application using the Python Streamlit library to uncover patterns and trends that can guide aspiring professionals. The application will be based on comprehensive visualizations highlighting salary distributions prepared in phase 1 of the project.

The project’s phase 2 implementation.

Web Application: the Streamlit library in Python

Streamlit is a Python library that simplifies building interactive web applications for data science and machine learning projects. In this project, it allowed me to create dynamic and intuitive user interfaces directly from Python scripts, without writing additional HTML, CSS, or JavaScript.

With Streamlit, users can easily visualize data, create interactive charts, and incorporate machine learning models into web applications with minimal code. For example, I used several Python functions in this project to generate insights from visualizations and present them in a simple web application.

This library is particularly beneficial for data scientists and developers who want to share their work or deploy models in a user-friendly manner, because it lets them prototype ideas and create powerful, data-driven applications quickly. That is exactly what I set out to do in this project.

Creating the web application in Microsoft VS Code.

To achieve the project’s objective, I followed six steps. Let us go through each of them in detail.

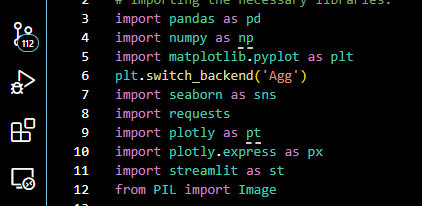

Step 1: Importing the necessary libraries to build the web application

The first thing to do is import the Python libraries required to load and manipulate data.

These are the same libraries used in the end-to-end data analysis process in phase 1 of the project. It’s important to note that Streamlit has been added to this phase.

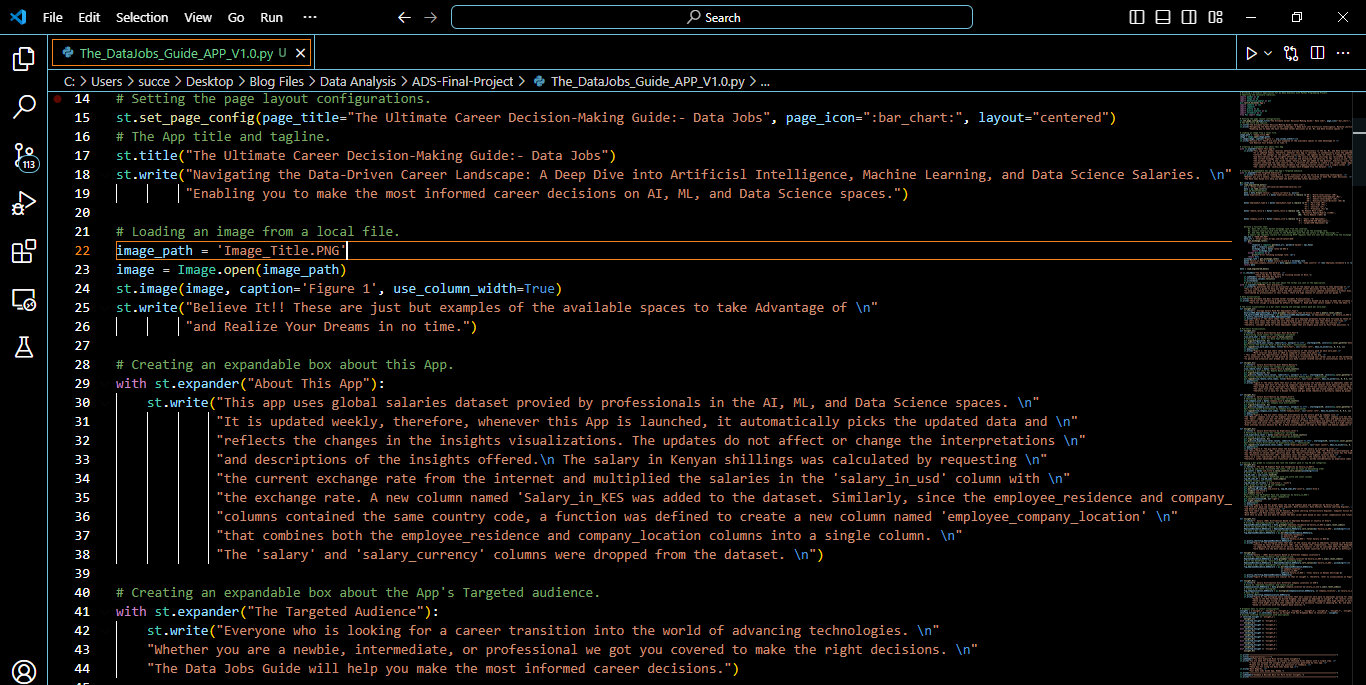

Step 2: Setting the page layout configurations

Next, I designed the application interface using the code snippet below. I set the application name, loaded the image on the homepage, and created the “Targeted Audience” and “About the App” buttons.

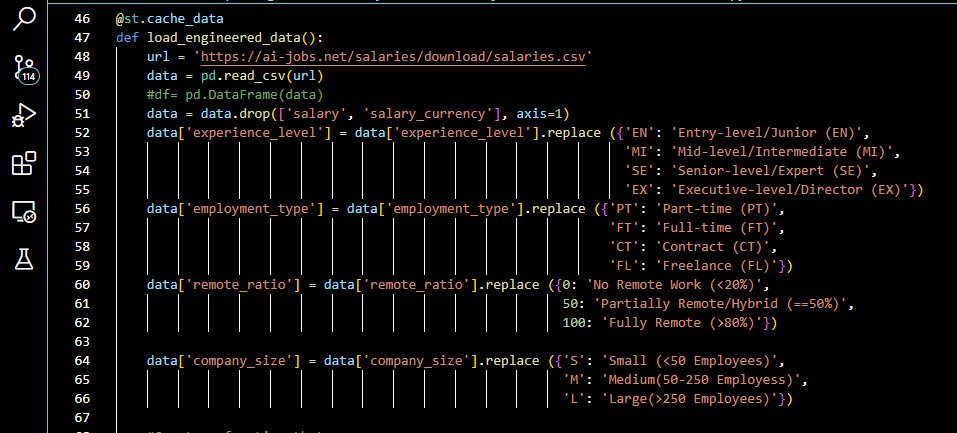

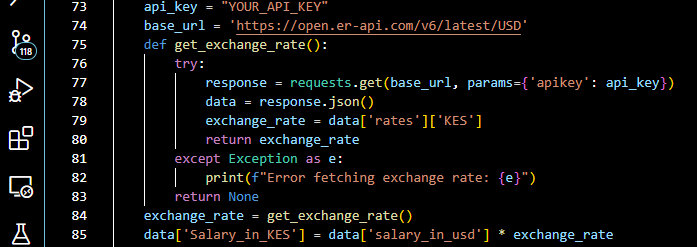
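The original code for this step is shown only as screenshots. As a rough text sketch of the same idea, assuming the standard Streamlit calls `st.set_page_config`, `st.title`, `st.image`, `st.button`, and `st.info`, the layout step might look like the following. The function name `render_home`, the image path, and the two text arguments are hypothetical; only the app name and the button labels come from this guide.

```python
from typing import Any

# App name as given in this guide.
APP_NAME = "The Ultimate Career Decision-Making Guide: – Data Jobs"


def render_home(st: Any, image_path: str, audience_text: str, about_text: str) -> None:
    """Draw the landing page. `st` is the imported streamlit module."""
    # set_page_config has to be the first Streamlit command on the page.
    st.set_page_config(page_title=APP_NAME, layout="wide")
    st.title(APP_NAME)
    st.image(image_path)  # hypothetical path to the homepage image

    # st.button returns True only on the rerun triggered by a click.
    if st.button("Targeted Audience"):
        st.info(audience_text)
    if st.button("About the App"):
        st.info(about_text)
```

In an `app.py` file you would `import streamlit as st`, call `render_home(st, ...)` with your own image path and texts, and start the app with `streamlit run app.py`. Passing the module in as an argument is a choice made for this sketch so the function can be checked with a stand-in object.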

Step 3: Loading the engineered data.

After creating the web application layout, I proceeded to load the data along with the newly engineered features. The two code snippets below show how to load data in Streamlit and ensure that it remains the same every time it is loaded.

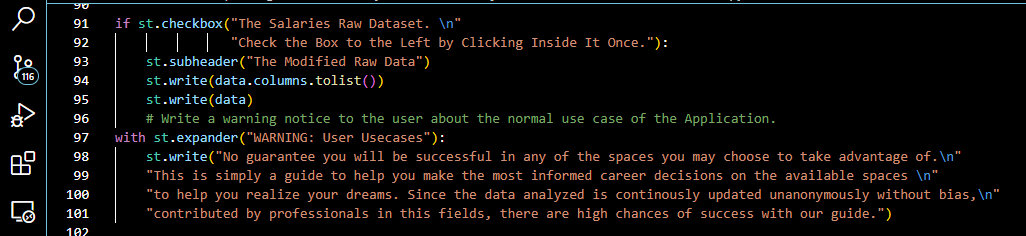
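One common way to keep loaded data stable across Streamlit reruns is the `st.cache_data` decorator, which reuses a function's result when it is called again with the same arguments. Whether the original snippet uses it is an assumption, since the code appears only as an image. The sketch below reads the file with the standard-library `csv` module to stay dependency-free; the helper name `make_loader` is hypothetical, and a pandas-based loader could be wrapped in the same way.

```python
import csv
from typing import Any, Callable


def make_loader(st: Any) -> Callable[[str], list[dict[str, str]]]:
    """Build a cached CSV loader. `st` is the imported streamlit module."""

    @st.cache_data  # reuse the result on reruns while the path argument is unchanged
    def load_data(path: str) -> list[dict[str, str]]:
        # Read every row as a dict keyed by the CSV header.
        with open(path, newline="", encoding="utf-8") as handle:
            return list(csv.DictReader(handle))

    return load_data
```

A call such as `rows = make_loader(st)("engineered_data.csv")` (the file name is a placeholder) would then return the same rows on every rerun without reading the file again.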

Step 4: Create the web application “Raw Data” & “Warning: User Cases” display buttons

The “Raw Data” button was designed to ensure a user can see the engineered dataset with the new features on the Web Application. Similarly, the “Warning: User Cases” button was created to warn the user about the application’s purpose and its limitations.

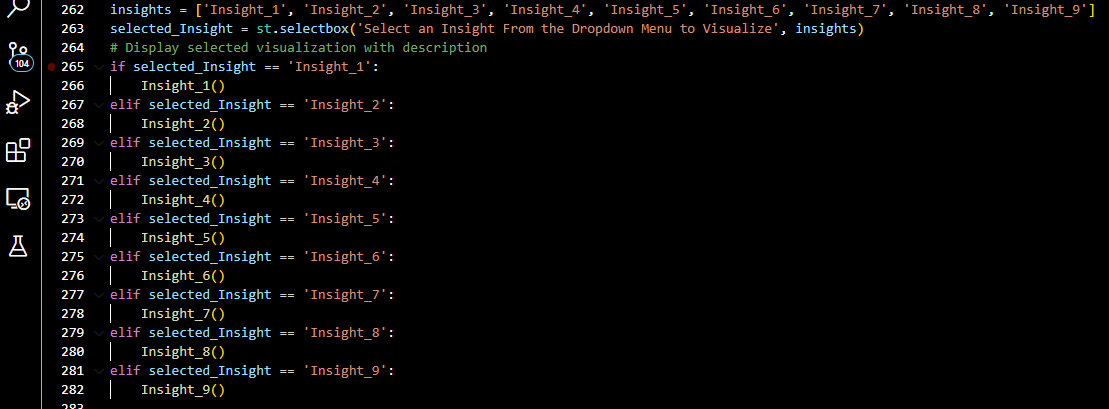
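As a minimal sketch of how two such buttons could be wired up, assuming the standard `st.button`, `st.dataframe`, and `st.warning` calls, consider the function below. The function name `render_data_buttons` and its arguments are hypothetical; the button labels are the ones named in this step.

```python
from typing import Any, Sequence


def render_data_buttons(st: Any, rows: Sequence[dict], warning_text: str) -> None:
    """Show the dataset or the usage warning when its button is clicked."""
    if st.button("Raw Data"):
        st.dataframe(rows)  # interactive table of the engineered dataset
    if st.button("Warning: User Cases"):
        st.warning(warning_text)  # highlighted notice about purpose and limits
```

Because `st.button` is true only on the rerun that follows a click, clicking one button hides the output of the other. If both should stay visible, `st.checkbox` or `st.expander` are possible alternatives.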

Step 5: Insights generation and presentation in the Web Application.

There were nine insights and recommendations. The code snippets below show how each insight was developed. Visit the application to view them by clicking the link given below.

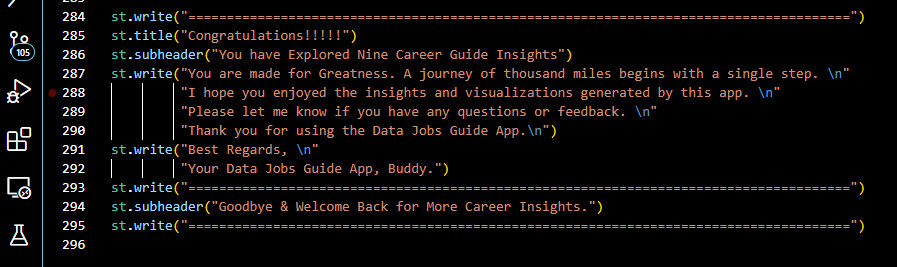
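To give a feel for what one insight function might look like in text form, here is a hedged sketch that averages salaries per category and draws a bar chart with `st.bar_chart`. Using the mean as the summary statistic is an assumption, as are the function names and the `employment_type` column used in the usage note; `Salary_in_KES` is the salary column named in this guide.

```python
from collections import defaultdict
from statistics import mean
from typing import Any, Iterable, Mapping


def mean_salary_by(
    rows: Iterable[Mapping[str, Any]],
    group_field: str,
    salary_field: str = "Salary_in_KES",
) -> dict[str, list]:
    """Average the salary column for each distinct value of `group_field`."""
    groups: dict[str, list[float]] = defaultdict(list)
    for row in rows:
        groups[row[group_field]].append(float(row[salary_field]))
    labels = sorted(groups)
    # Column-oriented result: one list of labels, one list of means.
    return {group_field: labels, "mean_salary": [mean(groups[k]) for k in labels]}


def render_insight(st: Any, title: str, rows: Iterable[Mapping[str, Any]], group_field: str) -> None:
    """Show a titled bar chart of mean salary per category. `st` is streamlit."""
    st.subheader(title)
    st.bar_chart(mean_salary_by(rows, group_field), x=group_field, y="mean_salary")
```

For example, `render_insight(st, "Employment Type", rows, "employment_type")` would draw one bar per employment type.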

Step 6: Web application user appreciation note

After exploring the 9 insights, the summary section congratulates the App user. Additionally, it tells them what they have done and gained by using my application.

Finally, it bids them goodbye and welcomes them back to read more on the monthly updated insights.

Key analytical dimensions in the web application

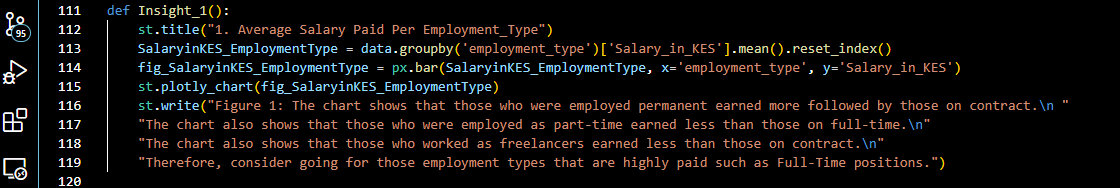

Based on the phase 1 data visualizations, I generated nine insights, one for each analytical dimension listed below. Each dimension is accompanied by the code snippet that presents its insight in the web application interface. After reading them, I recommend you click the link at the end of the post to visit the deployed application and see for yourself what Streamlit, a simple and freely available tool, can do.

1. Employment Type: Unraveling the nuances of salaries in full-time, part-time, contract, or freelance roles.

2. Work Years: Examining how salaries evolve over the years.

3. Remote Ratio: Assessing the influence of remote work arrangements on salaries.

4. Company Size: Analyzing the correlation between company size and compensation.

5. Experience Level: Understanding the impact of skill proficiency on earning potential.

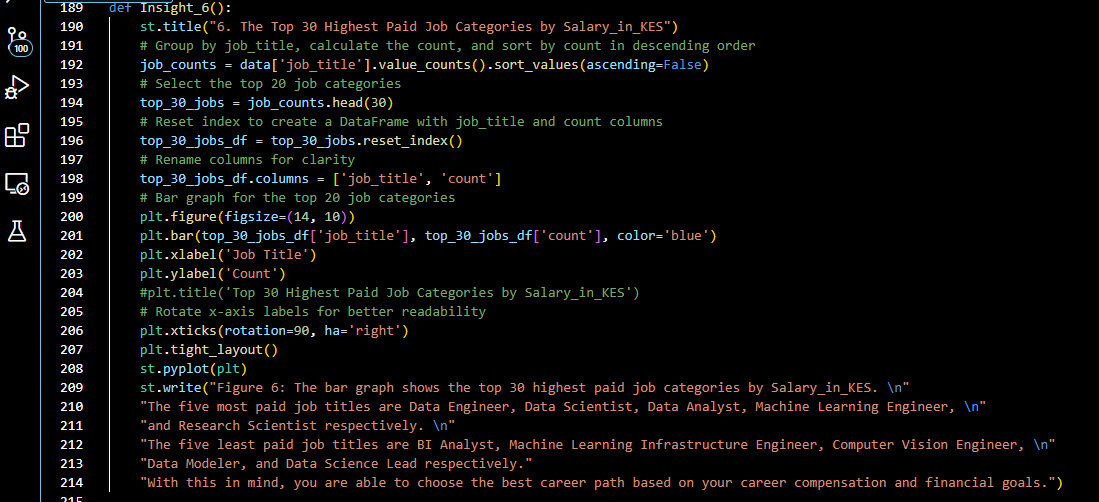

6. Highest Paid Jobs: Exploring which job category earns the most.

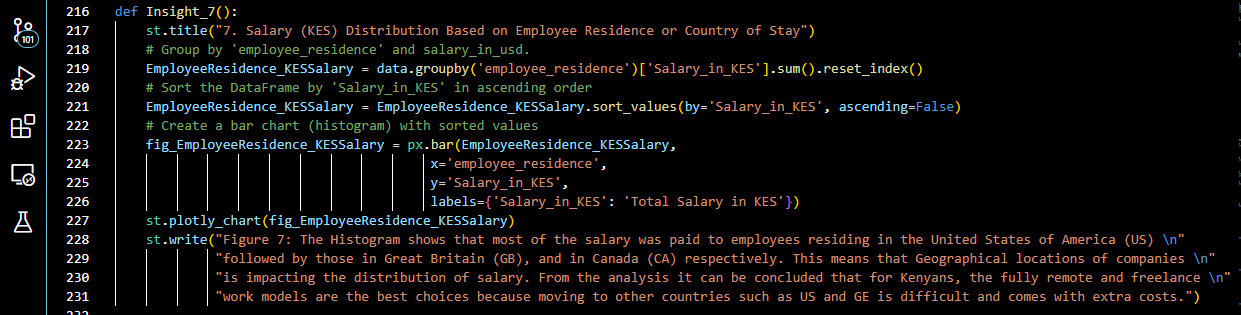

7. Employee Residence Impacts on Salary: Investigating the salary (KES) distribution based on the country where the employee resides.

8. Company Location: Investigating geographical variations in salary structures.

9. Salary_in_KES: Standardizing salaries to a common currency for cross-country comparisons.
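To illustrate the idea behind dimension 9, the sketch below converts a salary into Kenyan shillings (KES) using a lookup table of exchange rates. The function name `to_kes` is hypothetical, and the rates are placeholders chosen for easy arithmetic, not real market rates; the project's actual conversion method is not described in this guide.

```python
def to_kes(amount: float, currency: str, rates_to_kes: dict[str, float]) -> float:
    """Convert `amount` in `currency` to KES using the supplied rate table."""
    if currency not in rates_to_kes:
        raise ValueError(f"no exchange rate for currency {currency!r}")
    return amount * rates_to_kes[currency]


# Placeholder rates for illustration only; these are NOT real market rates.
PLACEHOLDER_RATES = {"KES": 1.0, "USD": 100.0, "EUR": 120.0}
```

Once every salary is expressed in the same currency, values from different countries can be compared directly on a single chart.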

Conclusion

In conclusion, by examining the critical analytical dimensions, the project seeks to provide a nuanced perspective on the diverse factors shaping salaries in the AI, ML, and Data Science sectors. Armed with these insights, individuals can navigate their career paths with a clearer understanding of the landscape, making strategic decisions that enhance their success in these dynamic and high-demand fields.

I recommend you explore the application with an open mind and remember to read the “Warning: User Cases” note. Do not wait any longer; open “The Ultimate Career Decision-Making Guide: – Data Jobs” web application and get the best insights.

The Streamlit Application Link: It takes you to the final application deployed using the Streamlit Sharing platform – Streamlit.io. https://career-transition-app-guide–data-jobs-mykju9yk46ziy4cagw9it8.streamlit.app/